Security threats to AI models are giving rise to a new crop of startups

AI's rapid adoption highlights security issues, prompting startups such as Zendata and Credo AI to address data leakage and unauthorized access risks.

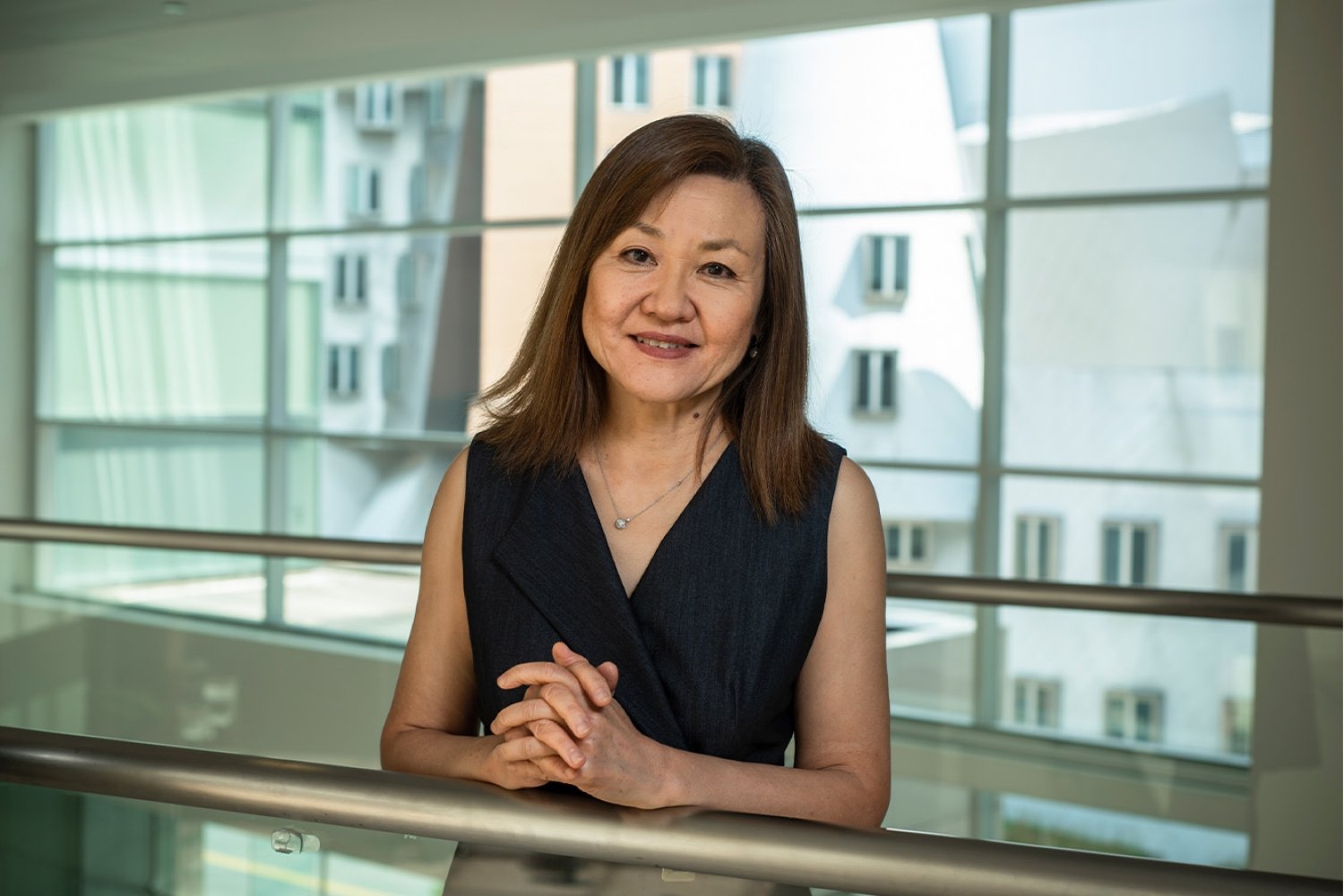

Getty Images

- Security and privacy are growing concerns as companies adopt AI.

- Companies strive to protect against malicious attacks and follow strict data compliance standards.

- Startups like Opaque Systems and Hidden Layer aim to tackle these security threats.

As more companies adopt AI, security and privacy issues have come to light.

AI models get trained on massive datasets that can include personal or sensitive information, making it critical for companies — particularly those in highly regulated industries like finance and healthcare with strict data compliance rules — to ensure that AI systems are safe from potential threats like leaked or poisoned data.

The threats to AI models and the data that trains them are growing in the age of large language models. "It's so easy for an employee to take something that's confidential and share it with an LLM," said Ashish Kakran of Thomvest Ventures. "LLMs do not have, as of right now, a forget it kind of button… You need safeguards and controls around all of this in the way these LLMs are deployed in production."

What's emerged is a growing group of startups aimed at tackling security threats related to AI. "This is actually a gap," Kakran added. "But that's also an opportunity for startups."

Thomvest-backed Opaque Systems, for instance, enables companies to share private data through a confidential computing platform to accelerate AI adoption. Credo AI, which has raised $41.3 million in total funding, is an AI governance platform that helps companies adopt AI responsibly by measuring and monitoring its risks.

Another startup, Zendata, has raised over $3.5 million and focuses on preventing leakage of sensitive data when integrating AI into enterprise systems and workflows. Companies can be vulnerable to attacks through a compromised third-party AI vendor if attackers gain unauthorized access to confidential data. Companies "don't really know if some shadow AI applications are in place and a bunch of user information is being sent to that," said Zendata CEO Narayana Pappu.

Pappu added that customers may not be aware that the shared information may also be used to train broader AI models. "There's a cross-use of information. There's information leakage, all of that. And that's a huge concern with the foundation models or even copilots."

Startups like Glean, an enterprise AI startup valued at $4.6 billion, are incorporating security principles, such as strict permissioning control, into their core products. "Glean's AI assistant is fully permissions-aware and personalized, only sourcing information the user has explicit access to," said CEO Arvind Jain. This ensures that users only access the information they are authorized to see, preventing any unintended access or misuse of the data.
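To make the idea of permissions-aware retrieval concrete, here is a minimal Python sketch. It is not Glean's implementation; the `Document` class, the `allowed_users` field, and both function names are hypothetical, and a real system would likely rely on group-based access controls and a proper search index rather than a substring match.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Document:
    """Hypothetical document record carrying an explicit access list."""
    doc_id: str
    text: str
    allowed_users: frozenset[str] = field(default_factory=frozenset)


def permitted_documents(user: str, documents: list[Document]) -> list[Document]:
    """Return only the documents this user has been explicitly granted."""
    return [doc for doc in documents if user in doc.allowed_users]


def build_context(user: str, query: str, documents: list[Document]) -> str:
    """Assemble assistant context from permitted documents that mention the query.

    The permission filter runs before any matching, so text the user cannot
    see never reaches the model prompt.
    """
    visible = permitted_documents(user, documents)
    matches = [doc.text for doc in visible if query.lower() in doc.text.lower()]
    return "\n".join(matches)
```

The point of the sketch is the ordering: access is checked before retrieval, so restricted text is excluded from the prompt instead of being filtered out of the answer afterward.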

Adversarial attacks are also a growing concern when it comes to deploying AI. Attackers can exploit vulnerabilities in a system for malicious purposes, leading to harmful or flawed results.

Bessemer Venture Partners' Lauri Moore noted that when evaluating an AI tool, many security leaders ask whether it will introduce a critical vulnerability or trap door that a bad actor could exploit.

A nightmare scenario could be a coding agent introducing code with security vulnerabilities, Moore said. She highlighted an instance in which a developer gradually gained credibility on an open-source project and took control of it, only to hijack it.

Another threat, known as prompt injection, has become increasingly common with the rise of prompt-based language models. These attacks trick a model into producing harmful output by feeding it malicious instructions. Haize Labs, which raised a hot investment round this summer, tackles this problem through AI red-teaming, which involves stress-testing large language models to find vulnerabilities in their system guardrails.

To address the growing number of security concerns in AI, startups are now applying continuous monitoring to the space. Continuous monitoring systems assess model behavior, monitor for security breaches, and score models based on their performance and safety, said Arvind Ayyala, partner at Geodesic Capital.
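As a rough illustration of what such scoring could look like, the Python sketch below rates a batch of evaluation results on performance and safety and flags the batch when either falls outside a threshold. It does not represent any vendor's product; the `EvalResult` fields, the function names, and the threshold values are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    """One evaluated model response (hypothetical record)."""
    correct: bool  # did the response match the expected answer?
    unsafe: bool   # did a safety check flag the response?


def score_batch(results: list) -> dict:
    """Compute accuracy and unsafe-response rate for one batch of results."""
    total = len(results)
    if total == 0:
        raise ValueError("cannot score an empty batch")
    return {
        "accuracy": sum(r.correct for r in results) / total,
        "unsafe_rate": sum(r.unsafe for r in results) / total,
    }


def needs_alert(scores: dict, min_accuracy: float = 0.9,
                max_unsafe_rate: float = 0.01) -> bool:
    """Flag a batch whose accuracy is too low or unsafe rate too high.

    The default thresholds are illustrative assumptions, not recommendations.
    """
    return (scores["accuracy"] < min_accuracy
            or scores["unsafe_rate"] > max_unsafe_rate)
```

Run on a schedule against fresh samples of model traffic, a check like this is what separates continuous evaluation from a one-time manual review.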

Protect AI, which raised a $60 million Series B from Evolution Equity in August, focuses on continuously monitoring and managing security across the AI supply chain to minimize the risk of attacks. Hidden Layer, which automatically detects and responds to threats, raised a $50 million Series A from M12 and Moore Strategic Ventures last year.

"This shift towards automated, continuous evaluation represents a significant evolution in AI safety practices, moving beyond periodic manual assessments to a more proactive and comprehensive approach," Ayyala said.
